This article explores how to design and operate a data and machine-learning architecture for real production environments, where scalability, availability, and disaster recovery matter as much as model accuracy. Using vector databases, a distributed architecture, and a cluster-level backup system, we show how to move from fragile, manual solutions to more reliable, automated operations aligned with business needs.

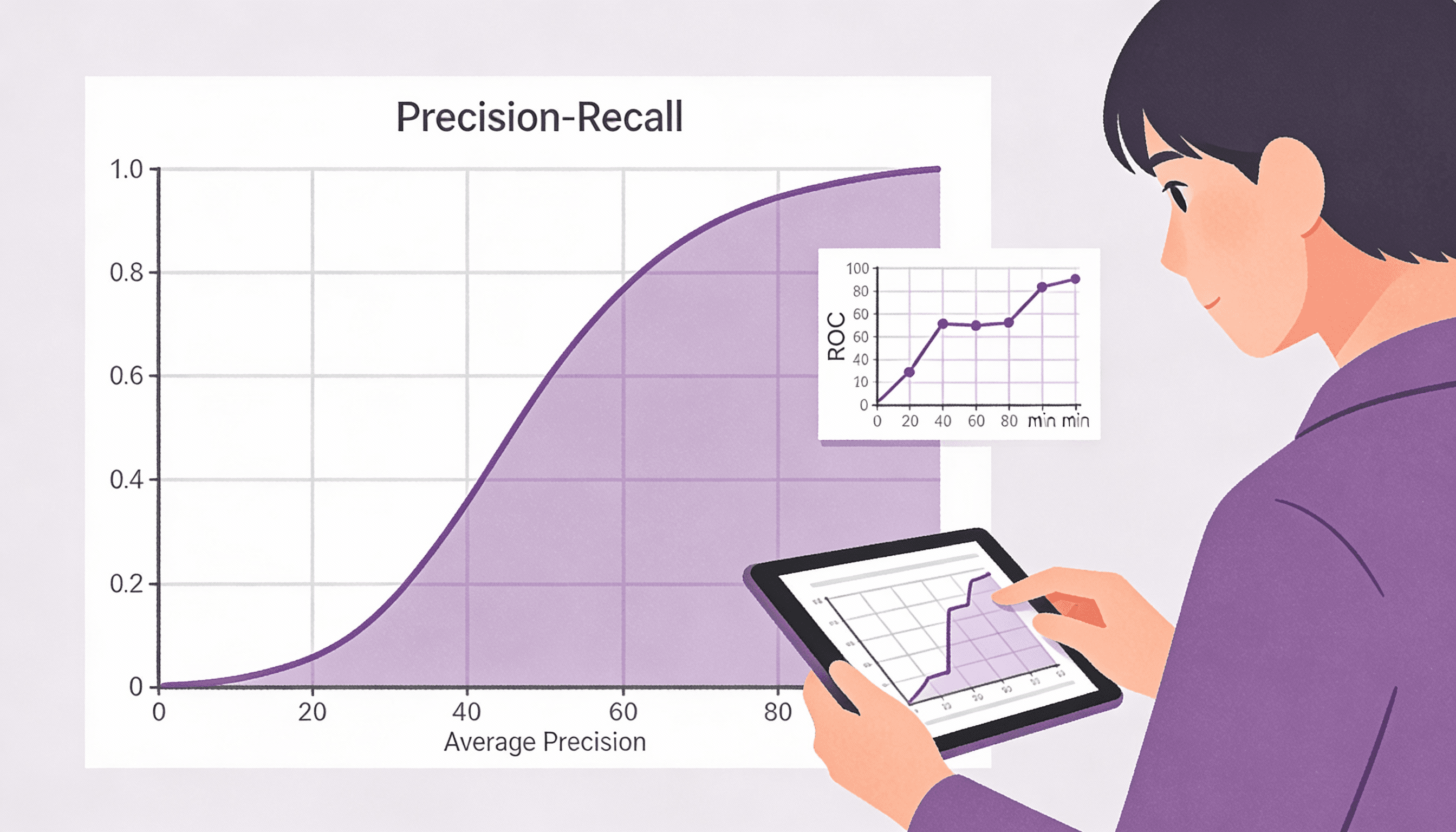

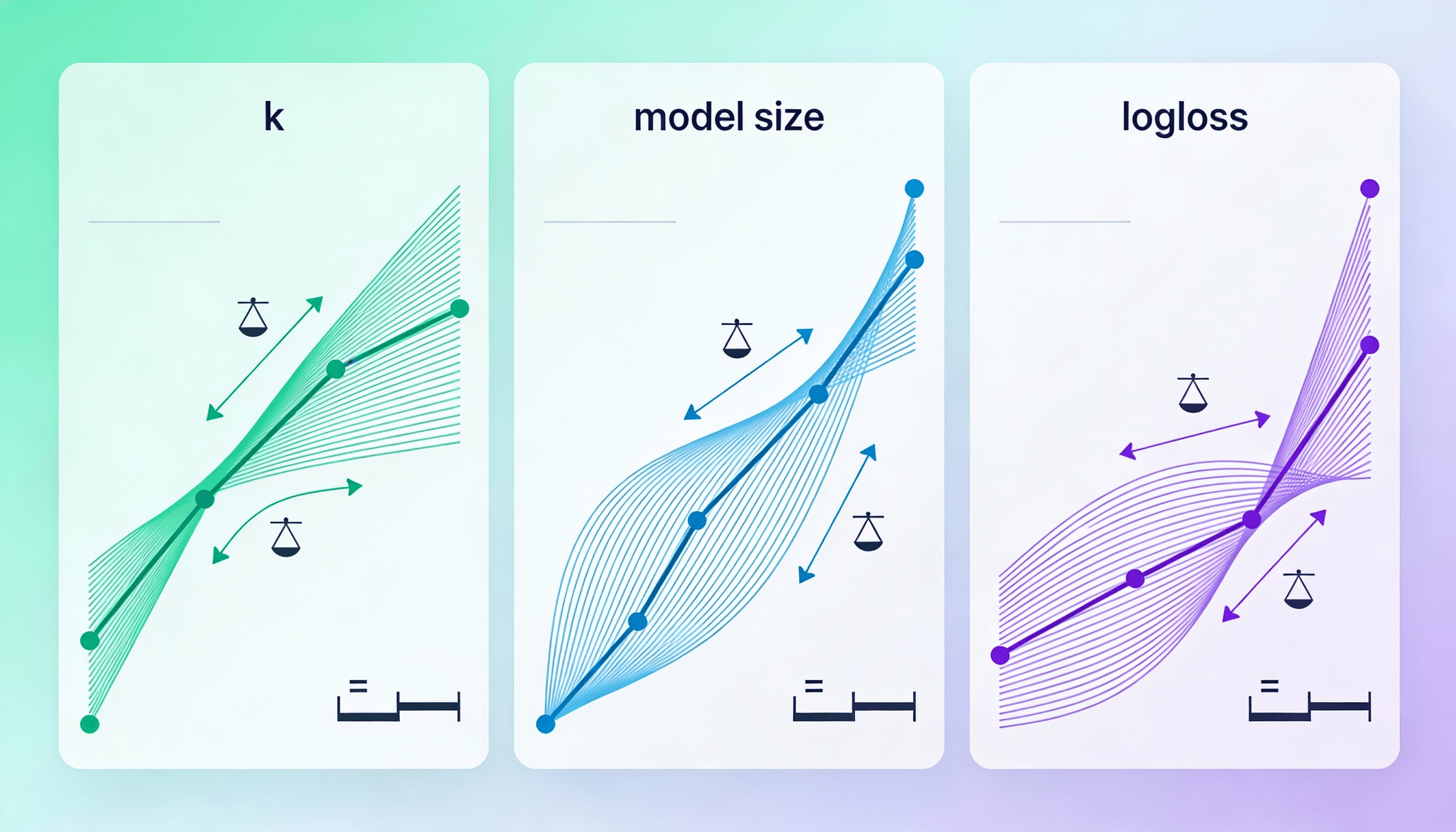

In machine-learning-driven systems, improving a model’s accuracy does not always translate into better performance in production. Even small technical changes can have side effects on response times, memory usage, or operational stability.

At Meetlabs, where models make structured, real-time decisions, understanding these trade-offs is as important as improving evaluation metrics.

Conversion-rate (CVR) prediction is central to many intelligent systems: it estimates the probability that a user will complete a valuable action (e.g., sign up, make a purchase, or interact with a product). These predictions drive automated decisions that must execute within milliseconds.
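Because a CVR model outputs a probability, a common way to evaluate it offline is binary log loss (cross-entropy), compared against a trivial baseline that always predicts the observed conversion rate. The sketch below is illustrative only; `log_loss` and `base_rate_log_loss` are hypothetical helpers, not taken from Meetlabs' codebase.

```python
import math
from typing import Sequence


def log_loss(labels: Sequence[int], probs: Sequence[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy between 0/1 conversion labels and predicted CVRs."""
    if not labels or len(labels) != len(probs):
        raise ValueError("labels and probs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(labels, probs):
        # Clip predictions so an overconfident 0.0 or 1.0 never hits log(0).
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


def base_rate_log_loss(labels: Sequence[int]) -> float:
    """Loss of a trivial model that always predicts the observed conversion rate."""
    rate = sum(labels) / len(labels)
    return log_loss(labels, [rate] * len(labels))
```

A model that does not beat `base_rate_log_loss` on held-out data adds no information beyond the average conversion rate, however sophisticated its architecture.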

To capture complex patterns in user behavior and context, models use embeddings: dense numerical vectors that condense the relevant information about categorical variables such as users, advertisers, or events.
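A minimal sketch of an embedding lookup, assuming the hashing trick is used to map raw categorical values into a fixed-size table. `HashedEmbeddingTable`, its bucket count, and the `field=value` key format are hypothetical illustrations, not the production design.

```python
import random
import zlib


class HashedEmbeddingTable:
    """Hypothetical embedding table: maps categorical values (user IDs,
    advertiser IDs, event names) to dense vectors via the hashing trick."""

    def __init__(self, num_buckets: int, dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.num_buckets = num_buckets
        self.dim = dim
        # Small random initial values; training would update these rows.
        self.weights = [
            [rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(num_buckets)
        ]

    def bucket(self, field: str, value: str) -> int:
        # Prefix with the field so "42" as a user ID and "42" as an
        # advertiser ID are hashed as different keys.
        key = f"{field}={value}".encode("utf-8")
        return zlib.crc32(key) % self.num_buckets

    def lookup(self, field: str, value: str) -> list[float]:
        return self.weights[self.bucket(field, value)]


table = HashedEmbeddingTable(num_buckets=1000, dim=8)
user_vec = table.lookup("user", "u_123")
adv_vec = table.lookup("advertiser", "a_9")
```

`zlib.crc32` is used instead of Python's built-in `hash()` because CPython randomizes string hashing per process, which would send the same ID to a different row after a restart. Hash collisions mean unrelated values can share a vector; a larger table reduces collisions at the cost of memory.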

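At Meetlabs we use Field-aware Factorization Machines (FFM), a model well suited to large, sparse data. Each feature learns a separate latent vector for every field it can interact with, which lets the model capture cross-field interactions (for example, between a user and an advertiser) while balancing accuracy against inference speed.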
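FFM scores an example by summing, over every pair of active features, the inner product of the vector each feature keeps for the *other* feature's field, weighted by the two feature values. The pure-Python sketch below implements that scoring rule and a sigmoid that turns the score into a CVR estimate. The optional bias term, the nested-list data layout, and the initialization range are assumptions for illustration, and training is omitted.

```python
import math
import random

# One active feature: (field index, feature index, value).
Feature = tuple[int, int, float]
# latent[j][f] is the k-dim vector feature j uses when paired with a feature from field f.
Latent = list[list[list[float]]]


def init_latent(num_features: int, num_fields: int, k: int, seed: int = 0) -> Latent:
    """Small random initialization for every (feature, field) vector."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(k)
    return [
        [[rng.uniform(0.0, scale) for _ in range(k)] for _ in range(num_fields)]
        for _ in range(num_features)
    ]


def ffm_score(features: list[Feature], latent: Latent, bias: float = 0.0) -> float:
    """bias + sum over pairs j1 < j2 of <w[j1][f2], w[j2][f1]> * x1 * x2."""
    score = bias
    for a, (f1, j1, x1) in enumerate(features):
        for f2, j2, x2 in features[a + 1:]:
            v1 = latent[j1][f2]  # j1's vector for the other feature's field
            v2 = latent[j2][f1]  # j2's vector for the other feature's field
            score += sum(p * q for p, q in zip(v1, v2)) * x1 * x2
    return score


def sigmoid(z: float) -> float:
    # Numerically stable logistic function for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_cvr(features: list[Feature], latent: Latent, bias: float = 0.0) -> float:
    return sigmoid(ffm_score(features, latent, bias))


# Hypothetical fields: 0 = user, 1 = advertiser, 2 = event.
latent = init_latent(num_features=6, num_fields=3, k=4)
example = [(0, 1, 1.0), (1, 3, 1.0), (2, 5, 1.0)]
p = predict_cvr(example, latent)
```

Only the non-zero features enter the double loop, so scoring cost grows with the number of active features per example rather than with the total feature vocabulary. The price is memory: every feature stores one vector per field.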

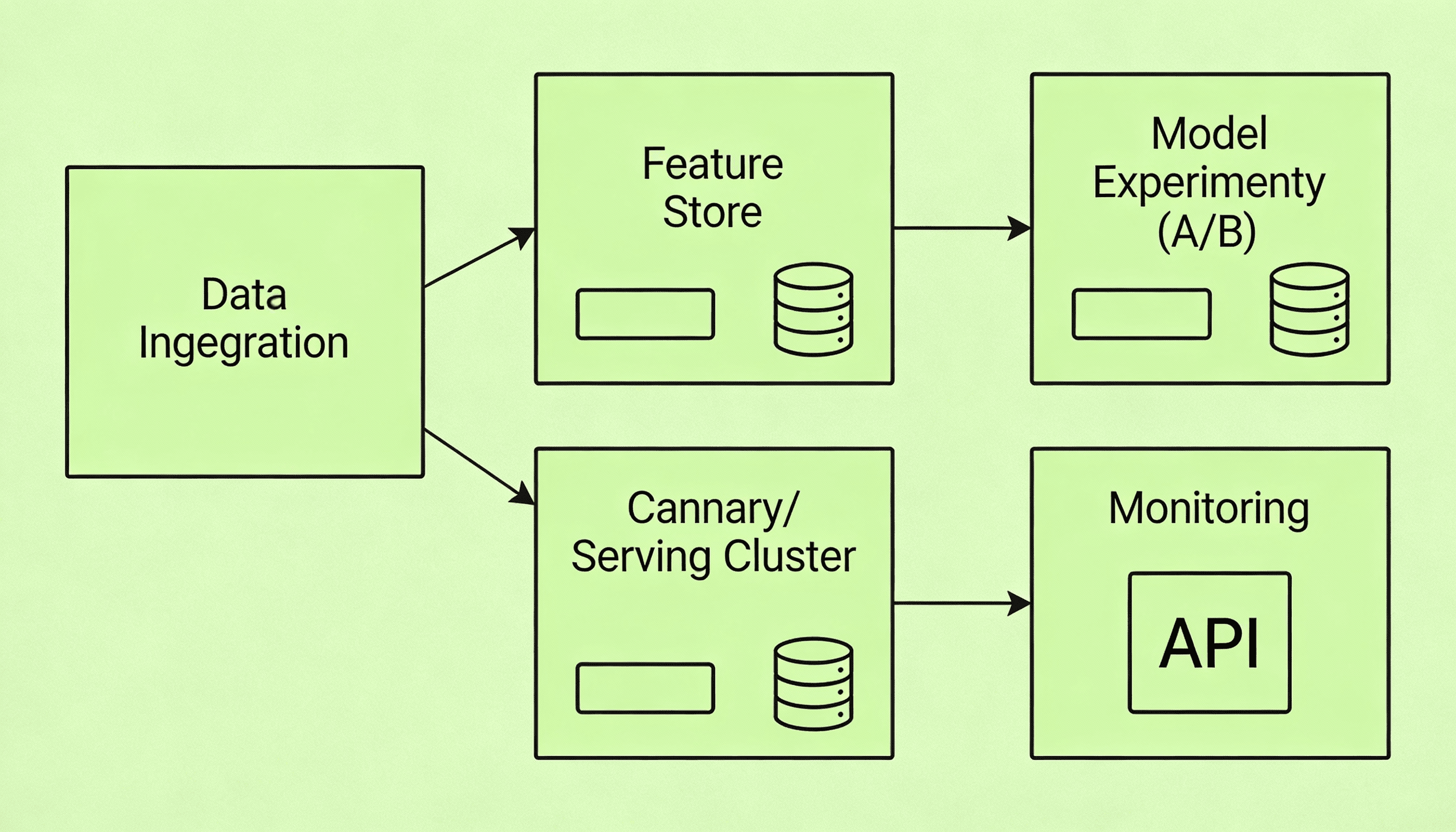

The technical analysis showed that architectural decisions directly affect system performance. By optimizing how vectors are stored and queried, we balanced capacity, speed, and resource consumption, which allowed AI models to run reliably in production.
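As a simplified illustration of how storage layout and query strategy affect capacity and resource use, the sketch below keeps vectors in one contiguous float32 buffer (Python's `array('f')`) rather than lists of Python floats, and answers queries with an exact brute-force top-k by inner product. `FlatVectorStore` is a hypothetical stand-in, not the vector database described in this article; production vector databases typically add approximate nearest-neighbor indexes to avoid scanning every vector.

```python
import heapq
from array import array


class FlatVectorStore:
    """Hypothetical store: all vectors packed back to back in one float32 buffer."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.data = array("f")  # 'f' = C float, 4 bytes on common platforms
        self.ids: list[str] = []

    def add(self, item_id: str, vector: list[float]) -> None:
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} values, got {len(vector)}")
        self.data.extend(vector)
        self.ids.append(item_id)

    def nbytes(self) -> int:
        # Raw payload size of the vector buffer (excludes the id list).
        return len(self.data) * self.data.itemsize

    def top_k(self, query: list[float], k: int) -> list[str]:
        """Exact top-k by inner product, scanning every stored vector."""
        scored = []
        for row, item_id in enumerate(self.ids):
            start = row * self.dim
            vec = self.data[start:start + self.dim]
            scored.append((sum(q * v for q, v in zip(query, vec)), item_id))
        best = heapq.nlargest(k, scored, key=lambda pair: pair[0])
        return [item_id for _, item_id in best]


store = FlatVectorStore(dim=2)
store.add("a", [1.0, 0.0])
store.add("b", [0.0, 1.0])
store.add("c", [0.7, 0.7])
print(store.top_k([1.0, 0.0], k=2))  # ['a', 'c']
```

The packed buffer costs `itemsize` bytes per value, several times less than a list of Python float objects. The linear scan is simple and exact, but its latency grows with the number of stored vectors; that is the point where an index becomes worth its extra memory and build time.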

The most valuable outcome was seeing how well-designed infrastructure reduces operational complexity and improves system reliability in ways that model metrics alone do not capture. This shift let us move from reactive management to more predictable, scalable operations.

Our analysis of how embeddings affect CVR prediction shows that, in production ML systems, the best decision is not always to increase model complexity. At Meetlabs, these evaluations allow us to make informed choices that balance accuracy, efficiency, and operational stability. Understanding these trade-offs is key to building reliable, scalable AI systems that deliver real business value.